Starting this week, I will try to cover the basics of Artificial Neural Networks in a simple and intuitive way. Let's start with a brief history of the artificial neural network.

Brief history of neural networks

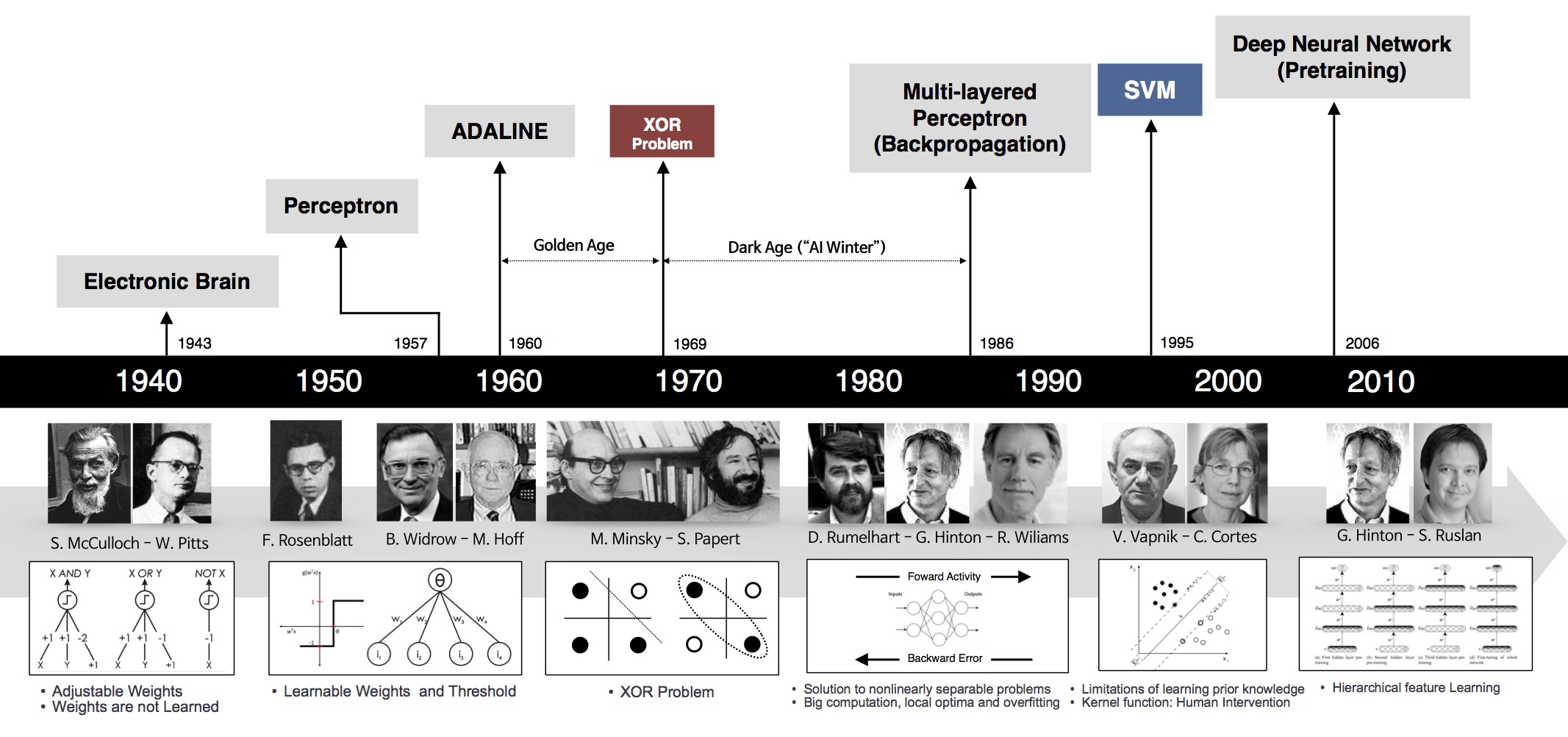

Since there are many good introductions already online, I will just list the important periods; you can check out this nice blog for more: 'A Concise History of Neural Networks'. The important periods for neural networks are:

1940s - The beginning of Neural Networks (Electronic Brain)

1950s and 1960s - The first golden age of Neural Networks (Perceptron)

1970s - The winter of Neural Networks (XOR problem)

1980s - Renewed enthusiasm (Multilayered Perceptron, backpropagation)

1990s - Subfield of Radial Basis Function Networks was developed

2000s - The power of Neural Networks Ensembles & Support Vector Machines is apparent

2006 - Hinton presents the Deep Belief Network (DBN)

2009 - Deep Recurrent Neural Network

2010 - Convolutional Deep Belief Network (CDBN)

2011 - Max-Pooling CDBN

I also found this cool figure online, which clearly shows the progress of neural networks:

What is a neural network and how does it work

First, how does our brain work to recognize things? Too bad, no one knows the exact answer. Many researchers are actively working on this and trying to find the truth. Past research has already given us much knowledge of how the brain works. In a much simplified version: in our brain, we have billions of neurons that do the computation to make the brain function correctly, like recognizing an apple or an orange in an image, memorizing certain things, making decisions, etc. These neurons are the basic elements the brain uses to process information. They are connected to each other, and based on different stimuli from the outside world, they can fire a signal to certain other neurons. The fired neurons form different patterns in response to different situations, and this lets our brain do a lot of amazing things. See the fired neurons in the following figure:

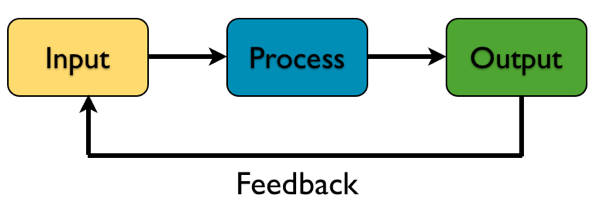

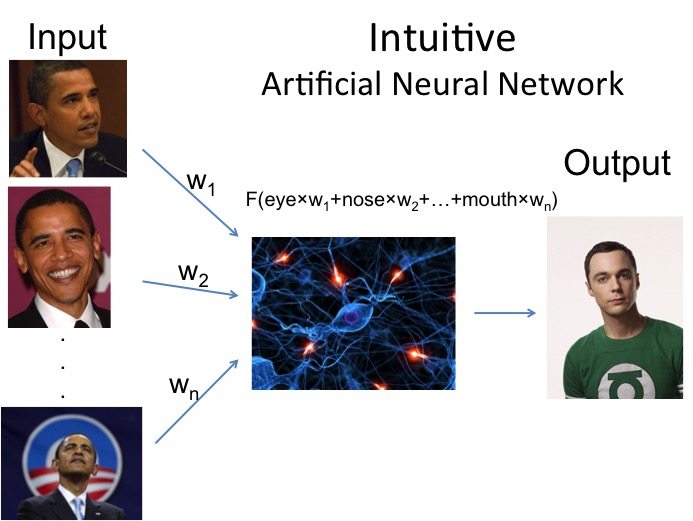

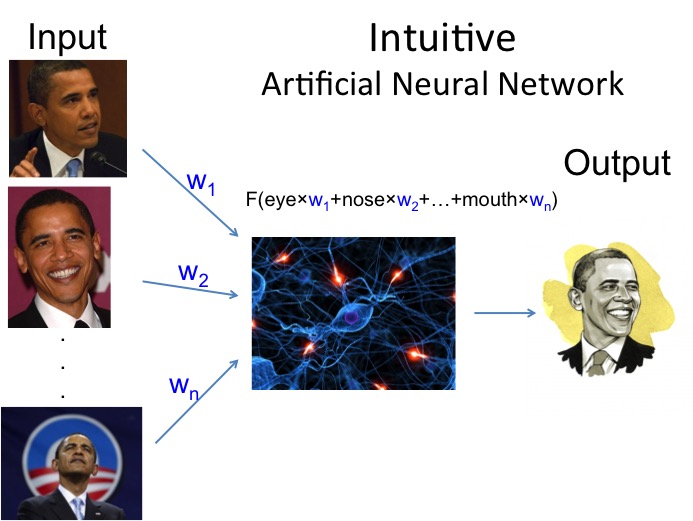

The artificial neural network (ANN) is a mathematical tool that models the above brain process to a certain degree (the truth is, we can only model the very basic things; there are many more complicated processes that we don't even understand). It has the basic components of how the brain works: the neurons, the firing capability, the learning capability and so on. At a very high level, an ANN can be simplified to the following model:

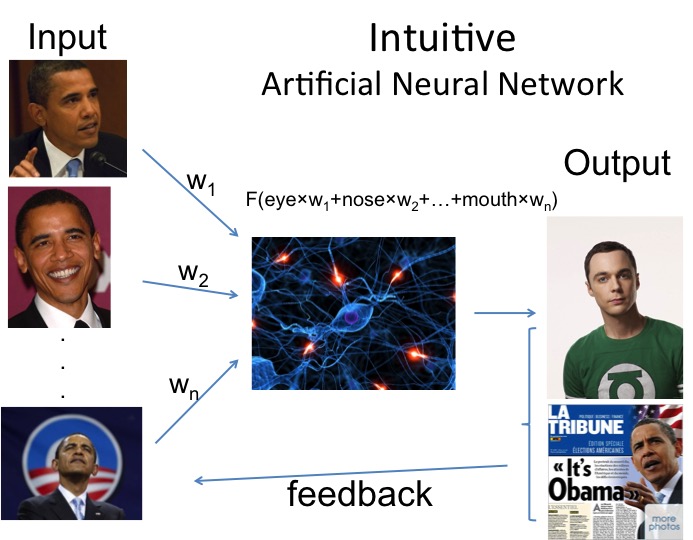

This simplified ANN model has 4 parts: the input layer, the process layer, and the output layer, plus a feedback process. Information first enters the system via the input layer. The ANN processes the input information and generates an output at the output layer, which can be evaluated to give feedback to the input layer and adjust how the information enters the system. Note that this feedback-and-adjust step is essentially the learning part of the ANN model: doing it many times adjusts the model to fit the data well, a process we can think of as learning from the data. Since we usually only see the input and output layers, and the process layer is kind of a black box to us, we usually call it the hidden layer.

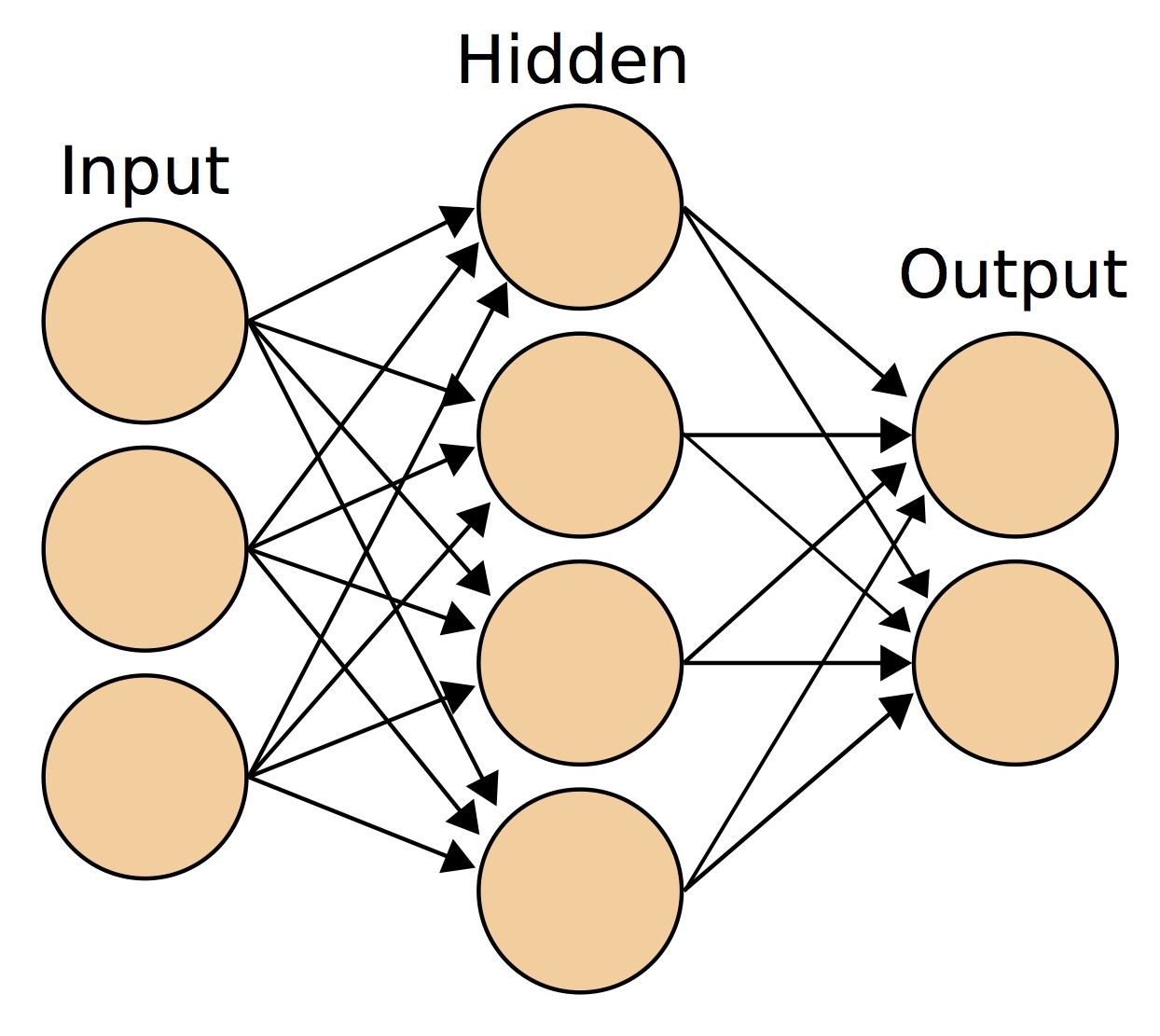

More formally, what you will usually see when you study ANNs is the following figure:

You have likely already seen the connection with the figure we just talked about: the input layer, the hidden (process) layer, and the output layer. Each input node (circle) takes one input and pushes it into the ANN model via the links (shown as the black arrows; they represent how strong the signals are from the input layer to each of the neurons) to the hidden nodes (we can also call them the neurons in the hidden layer). The neurons in the hidden layer process this information and pass it to the output layer, again via the links. What's missing from this figure is the feedback process, in which we compare the output with the true results and go back to adjust the strength of the links on both the input side and the output side, to strengthen the signals that help and weaken the ones that hurt. Usually we do this backward, from the output layer to the input layer, which is why it is called backpropagation.
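The information flow described above can be sketched in a few lines of Python. This is only an illustration under assumptions that are not in the post: the layer sizes, the weight values, and the choice of a sigmoid as the function each neuron applies are all made up for the example.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights):
    """Compute one layer: each neuron sums its weighted inputs, then applies sigmoid.

    weights[j][i] is the strength of the link from input i to neuron j.
    """
    return [sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)))
            for neuron_weights in weights]

# Hypothetical network: 3 input nodes -> 2 hidden neurons -> 1 output neuron.
inputs = [0.5, 0.1, 0.9]
hidden_weights = [[0.2, -0.4, 0.1],
                  [-0.3, 0.8, 0.5]]
output_weights = [[0.7, -0.6]]

hidden = layer_forward(inputs, hidden_weights)   # input layer -> hidden layer
output = layer_forward(hidden, output_weights)   # hidden layer -> output layer
print(hidden, output)
```

Every input node is linked to every hidden neuron, so `hidden_weights` has one row per neuron and one column per input.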

Let's talk a little more about the information flow and the role of the links. The information enters the ANN model from the input layer, and we can see that each of the input nodes is connected to all the neurons in the hidden layer. Like we said before, the links represent strength; we call them weights. We can think of them as pipes connecting the input nodes and the neurons. They control how much information passes through. The larger the weight (the larger the pipe), the more information passes from a given input node, and vice versa. The same holds from the hidden layer to the output layer. These weights/links control how much of a given piece of information passes across the network, and therefore how important it is. We can initialize the ANN model with small random weights (both positive and negative values). After we compare the output of the ANN model with the true results, we will find that some input information is more important than the rest for getting the results correct, while some makes things worse. For the information that makes the results correct, we can leave the weights unchanged or change them very little; for the information that makes the results wrong, we change the weights so that they contribute toward the correct answer. This is the role of the links, or weights.
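As a rough sketch of the "pipes" picture, the snippet below initializes small random weights with both signs and shows how much of each input reaches one neuron. The function name `init_weights`, the range of the weights, and the input values are assumptions chosen for illustration.

```python
import random

def init_weights(n_inputs, n_neurons, scale=0.1, seed=0):
    """Return an n_neurons x n_inputs grid of small random weights in [-scale, scale]."""
    rng = random.Random(seed)  # fixed seed so the example is repeatable
    return [[rng.uniform(-scale, scale) for _ in range(n_inputs)]
            for _ in range(n_neurons)]

weights = init_weights(n_inputs=3, n_neurons=4)

# What reaches the first neuron is each input scaled by the size of its "pipe".
inputs = [1.0, 2.0, 3.0]
contributions = [w * x for w, x in zip(weights[0], inputs)]
print(contributions)
```

A weight close to zero lets almost nothing through, while a negative weight makes the input count against the neuron firing.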

Simple Example

If you are still confused by the above explanation, let's look at a simple example to get a better sense of how an ANN works (this is not an accurate example of how an ANN works, but it shows the most important parts of the algorithm).

Let's say we have many pictures of President Obama and Dr. Sheldon Cooper. We want the ANN algorithm to recognize which pictures show President Obama and which show Dr. Sheldon Cooper.

Step 1 Information pass through network

The first step is to pass the information through the network, and we can see we have many pictures of President Obama to pass into the network. In order to recognize the picture, we need to find some clear characteristic features to make the classification. We can choose the eyes, nose, mouth, skin color, hair style, head shape, teeth, and so on as different features. In the hidden layer, we have n neurons, and you can see there are n links/weights connecting each of these features to the neurons. Each of the features we select has n links, one to every neuron, and each neuron works like a big function that aggregates the information (this is shown as the function in the picture, F(eye*w1 + nose*w2 + ... + mouth*wn)). The generated output from the neurons in this case is Dr. Sheldon Cooper. We can see the information from the input layer propagate through the whole network to the output layer.
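The function F(eye*w1 + nose*w2 + ... + mouth*wn) can be written out for a single neuron as below. The post does not say what F is, so a sigmoid is assumed here; the feature scores, the weights, and the rule that a score above 0.5 means "Obama" are also made up for the sketch.

```python
import math

def neuron(features, weights):
    """F(eye*w1 + nose*w2 + ...): a weighted sum passed through a sigmoid."""
    total = sum(features[name] * weights[name] for name in features)
    return 1.0 / (1.0 + math.exp(-total))

# Hypothetical feature scores extracted from one picture (made-up numbers).
features = {"eye": 0.8, "nose": 0.3, "mouth": 0.5}
# Untrained weights, small and of mixed sign.
weights = {"eye": -0.1, "nose": 0.2, "mouth": -0.05}

score = neuron(features, weights)
# Assumed convention: score > 0.5 means "Obama", otherwise "Sheldon".
label = "Obama" if score > 0.5 else "Sheldon"
print(score, label)
```

With these untrained weights the weighted sum is slightly negative, so the neuron leans towards "Sheldon", which mirrors the wrong answer in the example.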

Step 2 Information feedback

Now we need to evaluate the results from the ANN. We can see that the correct answer is President Obama. Clearly, the ANN algorithm made a mistake, and by comparing its output with the correct answer we get an error, which we use as the basis for updating the weights to reflect the fact that it made a mistake. This is the feedback process.
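One common way to turn "the network made a mistake" into a number is to encode the two answers as 1.0 and 0.0 and measure the gap. The encoding and the squared-error form below are assumptions for illustration; the post does not specify an error measure.

```python
def error(predicted, target):
    """Signed difference between the correct answer and the network output."""
    return target - predicted

def squared_error(predicted, target):
    """Half the squared difference, a common choice because it is easy to differentiate."""
    return 0.5 * (target - predicted) ** 2

predicted = 0.49   # hypothetical network output, leaning towards "Sheldon"
target = 1.0       # assumed encoding: 1.0 = Obama, 0.0 = Sheldon
print(error(predicted, target), squared_error(predicted, target))
```

The larger this number, the more the weights need to change in the next step.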

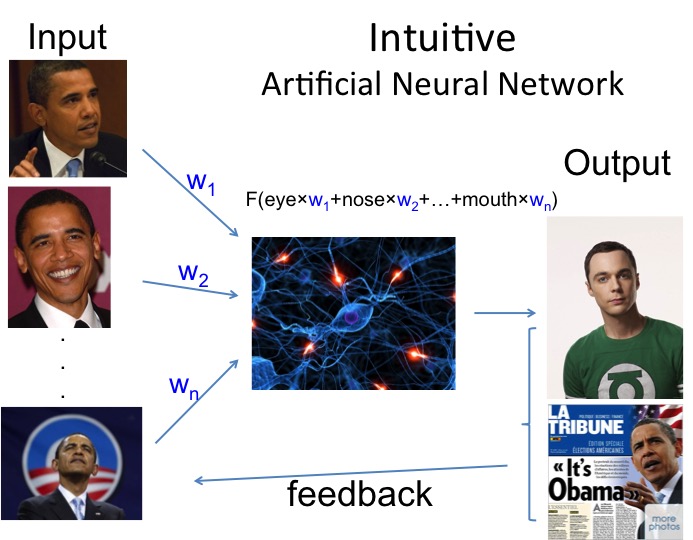

Step 3 Update weights

The error from the previous step may be caused by some of the features; for example, the shape of the head or the hair style may have confused the ANN into making the wrong decision. What we need to do is update the weights associated with those features so as to reduce the error. If a feature does capture the difference, say the skin color, then we may change its weight by only a very small amount, or not update it at all. The weights shown in blue are the ones we updated. With a lot of data fed into the network (training data) and the weights updated many times, the ANN will learn how to make the correct decision. Of course, the update is based on some rules that we will talk about in the next few weeks.
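The post leaves the update rules for later, so the following is only one possible rule, not necessarily the one the series will use: a single gradient-descent step for one sigmoid neuron with squared error. The learning rate, weights, and inputs are made-up values.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def update_weights(weights, inputs, target, learning_rate=0.5):
    """One gradient-descent step for a single sigmoid neuron with squared error."""
    output = sigmoid(sum(w * x for w, x in zip(weights, inputs)))
    # Gradient of 0.5 * (target - output)^2 with respect to the weighted sum.
    delta = (output - target) * output * (1.0 - output)
    # Each weight moves in proportion to the input it carried.
    return [w - learning_rate * delta * x for w, x in zip(weights, inputs)]

weights = [-0.1, 0.2, -0.05]   # hypothetical current weights
inputs = [0.8, 0.3, 0.5]       # hypothetical feature scores for one picture
new_weights = update_weights(weights, inputs, target=1.0)
print(new_weights)
```

Weights attached to inputs with larger values receive larger corrections, and repeating the step pushes the output towards the target.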

Step 4 Recognize correctly

After training the ANN with a lot of data, the weights have been updated to capture the differences between the images of these two people. Therefore, when we pass in the images of President Obama, we get the correct answer out!

The example here just gives you a sense of how an ANN works; we will cover more details in future posts. For now, you can check out part 2 of this blog.
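Putting the steps together, a toy training loop might look like the sketch below. It is a single sigmoid neuron rather than a full network, and the feature vectors, labels, learning rate, and number of passes are all invented for the illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, inputs):
    """Step 1: pass the information through the (one-neuron) network."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)))

def train(data, n_features, epochs=2000, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in range(n_features)]
    for _ in range(epochs):
        for inputs, target in data:
            output = predict(weights, inputs)
            # Step 2: feedback, the error scaled by the sigmoid slope.
            delta = (output - target) * output * (1.0 - output)
            # Step 3: update each weight in proportion to its input.
            weights = [w - learning_rate * delta * x
                       for w, x in zip(weights, inputs)]
    return weights

# Made-up feature vectors: [hair_style, head_shape, bias]; the last entry is always 1.0.
# Assumed encoding of the labels: 1.0 = Obama, 0.0 = Sheldon.
data = [
    ([0.9, 0.2, 1.0], 1.0),
    ([0.8, 0.3, 1.0], 1.0),
    ([0.2, 0.9, 1.0], 0.0),
    ([0.1, 0.7, 1.0], 0.0),
]

weights = train(data, n_features=3)
# Step 4: after training, the pictures are recognized.
labels = ["Obama" if predict(weights, x) > 0.5 else "Sheldon" for x, _ in data]
print(labels)
```

The toy data is deliberately easy to separate; real images would need many more features and hidden neurons.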

Acknowledgements

All the figures are from the internet. I thank the authors for the figures!
