Continuing from last week's first 10 data science questions (part 1), here are the remaining 10 questions:

Q11: What is selection bias, why is it important and how can you avoid it?

From Wikipedia, selection bias is "the selection of individuals, groups or data for analysis in such a way that proper randomization is not achieved, thereby ensuring that the sample obtained is not representative of the population intended to be analyzed." There is a very interesting example on YouTube.

Selection bias can cause the researcher to reach the wrong conclusion. I think the best way to avoid selection bias is first to get to know the common biases and how they affect research. Here is a nice review of bias in evidence-based medicine. Though that field is slightly different, a lot of the topics and concepts can be applied to other fields as well.
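A small simulation makes the effect concrete. The sketch below assumes a hypothetical survey in which each person's chance of responding grows with their satisfaction score; comparing a properly randomized sample with the self-selected one shows how the non-random sample overestimates the population mean. All numbers are made up for illustration.

```python
import random
import statistics

rng = random.Random(42)  # fixed seed so the run is reproducible

# Hypothetical population: 100,000 satisfaction scores, uniform on [0, 10].
population = [rng.uniform(0, 10) for _ in range(100_000)]

# Proper randomization: every member has the same chance of being picked.
random_sample = rng.sample(population, 1_000)

# Selection bias: each person answers with probability score / 10, so happier
# people are over-represented among the 1,000 respondents we keep.
respondents = [x for x in population if rng.random() < x / 10]
biased_sample = respondents[:1_000]

print(f"population mean: {statistics.mean(population):.2f}")
print(f"random sample:   {statistics.mean(random_sample):.2f}")
print(f"biased sample:   {statistics.mean(biased_sample):.2f}")
```

The random sample lands close to the population mean of about 5, while the biased sample lands near 6.7: under this response rule the expected value among respondents is E[X²]/E[X] = (100/3)/5 = 20/3.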

Q12: Give an example of how you would use experimental design to answer a question about user behavior.

I don't know how to answer this question, but I will try based on my knowledge. To design an experiment to learn about user behavior, I think it depends on the problem at hand. We first need to understand the goal of the problem, i.e., what behavior do we want to understand? Then we find the factors that affect that behavior. Finally, we need to brainstorm how to test these factors using experimental methods.
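One common concrete instance of this is an A/B test. As a hypothetical setup, suppose users are randomly assigned to an old (A) or new (B) version of a button, and the behavior we care about is whether they click. A two-sided two-proportion z-test then checks whether the click-through rates differ. The counts and the helper name are made up for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates between groups A and B."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # common rate under H0: no difference
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided standard normal tail
    return z, p_value

# Hypothetical experiment: 5,000 randomly assigned users per arm.
z, p = two_proportion_z_test(conv_a=200, n_a=5_000, conv_b=260, n_b=5_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Random assignment is what makes the comparison meaningful: it balances other factors between the two groups, so a small p-value points to the button change rather than to who happened to see it.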

Q13: What is the difference between "long" and "wide" format data?

Long and wide format describe two different presentations of tabular data. In the long format, the data is stored somewhat like key-value pairs: each subject (Iron man or Spider man) has data in multiple rows. In the wide format, each subject has all of its variables in the same row, separated into different columns. It is easier to see in an example:

Long format:

| Name | Variable | Value |
|---|---|---|
| Iron man | Color | red |
| Iron man | Material | iron |
| Iron man | Power | nuclear |
| Iron man | Height | 5'8" |
| Spider man | Color | red |
| Spider man | Material | cloth |
| Spider man | Power | food |
| Spider man | Height | 5'10" |

Wide format:

| Name | Color | Material | Power | Height |
|---|---|---|---|---|
| Iron man | red | iron | nuclear | 5'8" |
| Spider man | red | cloth | food | 5'10" |

Wide format data is often awkward to visualize when there are many variables. However, it is very easy to convert between the two formats using Python or R.
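A minimal pandas sketch of the conversion in both directions, using the superhero table above: `DataFrame.melt` turns the wide table into the long one, and `DataFrame.pivot` turns it back. Note that `melt` orders rows variable by variable, so the long table is sorted only for display.

```python
import pandas as pd

# Wide format: one row per subject, one column per variable.
wide = pd.DataFrame({
    "Name": ["Iron man", "Spider man"],
    "Color": ["red", "red"],
    "Material": ["iron", "cloth"],
    "Power": ["nuclear", "food"],
    "Height": ["5'8\"", "5'10\""],
})

# Wide -> long: every non-id column becomes a (Variable, Value) pair.
long = wide.melt(id_vars="Name", var_name="Variable", value_name="Value")

# Long -> wide: spread the Variable column back out into separate columns.
back = long.pivot(index="Name", columns="Variable", values="Value").reset_index()
back.columns.name = None  # drop the leftover "Variable" axis label
back = back[wide.columns]  # pivot sorts columns alphabetically; restore order

print(long.sort_values(["Name", "Variable"]))
print(back)
```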

Q14: What method do you use to determine whether the statistics published in an article (e.g. newspaper) are either wrong or presented to support the author's point of view, rather than correct, comprehensive factual information on a specific subject?

I think the statistics used in newspapers or on TV are all there to support the author's point. This is illustrated in the book How to Lie with Statistics.

It is difficult to tell whether the statistics are wrong, since we would need to reproduce the results ourselves. But many times we cannot redo the work, so I don't have a better way to determine whether the statistics are wrong.

Q15: Explain Edward Tufte's concept of "chart junk."

If you don't know Edward Tufte, then you should really get to know him and his famous books (see his webpage). I own 4 of his books: 'The Visual Display of Quantitative Information', 'Envisioning Information', 'Visual Explanations: Images and Quantities, Evidence and Narrative', and 'Beautiful Evidence'. All of these books are great. His most classic book is 'The Visual Display of Quantitative Information', which will take your data visualization to a new level. The concept of "chart junk" comes from this book:

The interior decoration of graphics generates a lot of ink that does not tell the viewer anything new. The purpose of decoration varies — to make the graphic appear more scientific and precise, to enliven the display, to give the designer an opportunity to exercise artistic skills. Regardless of its cause, it is all non-data-ink or redundant data-ink, and it is often chartjunk.

What he means is that chart junk consists of chart elements that are unnecessary for conveying the main information, or that distract the viewer. See the example of chartjunk on Wikipedia.
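As a rough illustration of the idea (not an example taken from Tufte or Wikipedia), here is a minimal matplotlib sketch of a low-ink bar chart with made-up values: it drops the frame lines and the y-axis and labels the bars directly, so nearly all of the remaining ink encodes data. `Axes.bar_label` needs matplotlib 3.4 or newer, and the output filename is arbitrary.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; no display needed
import matplotlib.pyplot as plt

categories = ["A", "B", "C", "D"]
values = [23, 17, 35, 29]  # illustrative numbers, not real data

fig, ax = plt.subplots(figsize=(5, 3))
bars = ax.bar(categories, values, color="0.4")  # one muted gray, no gradients or 3-D

# Remove non-data ink: the top/right frame lines and the left axis,
# which the direct labels below make redundant.
for side in ("top", "right", "left"):
    ax.spines[side].set_visible(False)
ax.tick_params(left=False, labelleft=False)

# Label each bar with its value instead of relying on gridlines.
ax.bar_label(bars, padding=2)

fig.tight_layout()
fig.savefig("low_ink_bar.png", dpi=150)
```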

Q16: How would you screen for outliers and what should you do if you find one?

An outlier is an observation that is distant from the other observations. It may come from two cases: (1) measurement error or (2) a heavy-tailed distribution. In the first case, we want to discard the outlier, but in the latter case, it is part of the information and needs special attention.

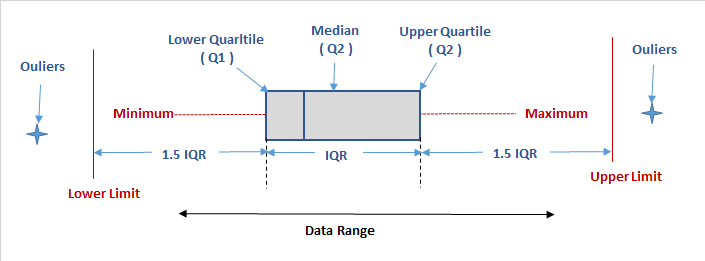

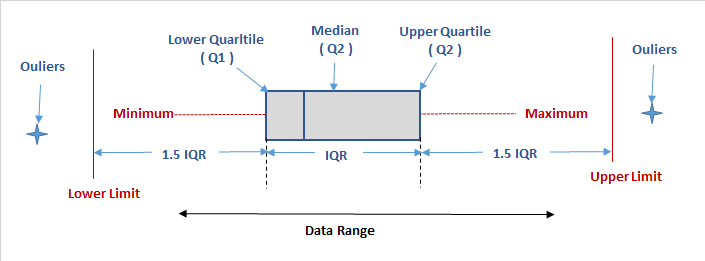

The way I usually find outliers is with a box plot: if a data point lies more than 1.5*IQR (interquartile range) below the first quartile or above the third quartile, I treat it as an outlier. See more details here.

There is more discussion about whether to drop outliers here.
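A minimal sketch of the 1.5*IQR rule using only the standard library, on made-up data: `statistics.quantiles(..., method="inclusive")` gives the quartiles (the same linear interpolation NumPy uses by default), and any point beyond the fences is flagged. The helper name is hypothetical.

```python
import statistics

def iqr_outliers(data, k=1.5):
    """Return points outside [Q1 - k*IQR, Q3 + k*IQR], the box-plot whisker fences."""
    q1, _, q3 = statistics.quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [x for x in data if x < lower or x > upper]

data = [10, 12, 12, 13, 12, 11, 14, 13, 15, 10, 10, 100]  # illustrative values
print(iqr_outliers(data))  # [100]
```

Here Q1 = 10.75 and Q3 = 13.25, so the fences are 7.0 and 17.0 and only 100 is flagged. Whether to drop it is still a judgment call, as discussed above.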

Q17: How would you use either the extreme value theory, Monte Carlo simulations or mathematical statistics (or anything else) to correctly estimate the chance of a very rare event?

I don't know extreme value theory, so I will talk about using Monte Carlo simulations to estimate the chance of a very rare event.

A very rare event is an event that occurs with a very small probability, so sampling it directly with Monte Carlo is really inefficient. For example, if the event occurs with probability $10^{-9}$, then on average we need a sample of size $n = 10^{9}$ to observe just a single occurrence, and many more samples if we want a reliable estimate of the mean and variance with a sufficiently narrow confidence interval.
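To make "many more samples" concrete, here is the standard variance calculation for the crude Monte Carlo estimator, added as a worked example. With $n$ independent samples $X_i$ and a rare event $A$ of probability $p$:

$$
\hat{p} = \frac{1}{n}\sum_{i=1}^{n} \mathbf{1}\{X_i \in A\}, \qquad
\operatorname{Var}(\hat{p}) = \frac{p(1-p)}{n}, \qquad
\text{RE}(\hat{p}) = \frac{\sqrt{\operatorname{Var}(\hat{p})}}{p} = \sqrt{\frac{1-p}{np}} \approx \frac{1}{\sqrt{np}}.
$$

For a relative error of 10% we need $np \approx 100$, so with $p = 10^{-9}$ that is about $n \approx 10^{11}$ samples.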

Importance sampling (IS) is a powerful tool for reducing the variance of an estimator by increasing the occurrence of the rare event. The basic idea of importance sampling is to change the probability laws of the system under study so that the events that matter most for the simulation are sampled more frequently. Of course, using this new distribution results in a biased estimator if no correction is applied. Therefore the simulation output needs to be translated back in terms of the original measure, which is done by multiplying it by a so-called likelihood ratio. See the details in chapter 2 of Rare Event Simulation using Monte Carlo Methods by Gerardo Rubino and Bruno Tuffin.
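A minimal sketch of importance sampling on a toy problem that is not from the source: estimating the tail probability $P(Z > 6)$ for a standard normal $Z$ (about $10^{-9}$). Instead of sampling from $N(0, 1)$, where 100,000 crude Monte Carlo draws would almost certainly see no hits at all, it samples from the shifted proposal $N(6, 1)$ and multiplies each hit by the likelihood ratio $\varphi(x)/\varphi(x-6) = e^{-6x + 18}$. The function names and the choice of proposal are illustrative assumptions.

```python
import math
import random

def tail_prob_exact(a):
    """P(Z > a) for a standard normal Z, via the complementary error function."""
    return 0.5 * math.erfc(a / math.sqrt(2))

def tail_prob_importance_sampling(a, n=100_000, seed=0):
    """Estimate P(Z > a), Z ~ N(0, 1), by sampling from the proposal N(a, 1)."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.gauss(a, 1.0)  # draw from the proposal q = N(a, 1)
        if x > a:
            # Likelihood ratio p(x) / q(x) = exp(-x^2/2) / exp(-(x - a)^2/2).
            total += math.exp(-a * x + a * a / 2)
    return total / n

a = 6.0
print(tail_prob_exact(a))  # about 9.87e-10
print(tail_prob_importance_sampling(a))
```

About half of the proposal draws land in the rare region, and the likelihood ratio corrects for the changed distribution, so the estimate stays unbiased while its relative error is on the order of 1% with 100,000 draws.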

Q18: What is a recommendation engine? How does it work?

A recommendation engine is a system that can predict a user's "rating" or "preference" based on the user's activities or other users' activities. One easy example is Amazon: when you browse books, you always see 'Recommended books for you' and 'Other people may like this'. This is Amazon's recommendation engine at work, based on your browsing history and other people's browsing history.

There are two common approaches: Collaborative filtering and Content-based filtering.

Collaborative filtering

This approach is based on collecting and analyzing a large amount of information on users' behaviors, activities or preferences and predicting what users will like based on their similarity to other users (from Wikipedia). The assumption is that similar people tend to behave similarly, so if we know their past behavior, we can predict their future behavior. For example, I love machine learning and always browse interesting machine learning books online. Another person also loves machine learning, and he has another set of books he usually browses. So if the system recommends a machine learning book I love to this person, there's a high chance he will love it as well.

Therefore, to use this approach, the first thing we need to do is collect a lot of data from different users and use it as the basis for predicting future user behavior. The advantage of this method is that we don't even need to know the content of the product; all we need is a large dataset of users' activities. On the other hand, this is also the disadvantage of the approach, since we first have to collect a large dataset to make it work. Therefore, when you start a new business, you may need to wait for a few years before you can recommend products to your users.
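A minimal sketch of user-based collaborative filtering, assuming a tiny hypothetical ratings table: users are compared with cosine similarity over their ratings, and items the target user has not rated are scored by the similarity-weighted ratings of the other users. Real systems use far larger, sparse matrices and usually keep only the top-k most similar neighbours; this keeps only the core idea.

```python
import math

# Hypothetical user -> {item: rating} table, made up for illustration.
ratings = {
    "alice": {"ml_book_1": 5, "ml_book_2": 4, "stats_book": 4},
    "bob":   {"ml_book_1": 5, "ml_book_2": 5, "ml_book_3": 5},
    "carol": {"cookbook": 5, "novel": 4, "stats_book": 1},
}

def cosine_similarity(u, v):
    """Cosine similarity of two sparse rating dicts (unrated items count as 0)."""
    dot = sum(u[item] * v[item] for item in set(u) & set(v))
    norm_u = math.sqrt(sum(r * r for r in u.values()))
    norm_v = math.sqrt(sum(r * r for r in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def recommend(user, ratings, top_n=3):
    """Rank items the user hasn't rated by similarity-weighted ratings of other users."""
    seen = ratings[user]
    scores = {}
    for other, their_ratings in ratings.items():
        if other == user:
            continue
        sim = cosine_similarity(seen, their_ratings)
        for item, rating in their_ratings.items():
            if item not in seen:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]

print(recommend("alice", ratings))  # ['ml_book_3', 'cookbook', 'novel']
```

Alice and Bob share two highly rated machine learning books, so Bob's `ml_book_3` comes out on top, mirroring the machine learning book example above. Note that nothing about the books' content is used.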

Content-based filtering

In contrast, the content-based filtering approach is based on an understanding of your products and the profile of your user, i.e., information about what this user likes. The algorithm then recommends products that are similar to those the user liked in the past. For example, I love the Iron man movies and have watched Iron man I, II, and III. Therefore, if the algorithm recommends 'I, Robot' to me, there's a high chance I will love it (indeed, both are among my favorites). But if the algorithm recommends a romance movie to me, I would say there's still room to train the algorithm to be better.

The content-based filtering approach doesn't need a large dataset as the first step. Instead, you can start recommending products to a user from the very beginning, as soon as they start choosing your products. But as you can see, you now have to learn the characteristics of your products; this is the trade-off!
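A minimal content-based sketch, assuming hand-labelled, hypothetical feature vectors (sci-fi, action, robots, romance) for the movies in the example: the user profile is the average feature vector of the movies they liked, and unseen movies are ranked by cosine similarity to that profile. The feature values, and 'The Notebook' standing in for a romance movie, are made up for illustration.

```python
import math

# Hypothetical hand-labelled item features: (sci-fi, action, robots, romance).
features = {
    "Iron Man":     (1, 1, 1, 0),
    "Iron Man 2":   (1, 1, 1, 0),
    "Iron Man 3":   (1, 1, 1, 0),
    "I, Robot":     (1, 1, 1, 0),
    "The Notebook": (0, 0, 0, 1),
}

def cosine(u, v):
    """Cosine similarity of two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0

def recommend(liked, features):
    """Rank unseen items by cosine similarity to the user's profile vector."""
    dims = len(next(iter(features.values())))
    # The user profile is the average feature vector of the liked items.
    profile = [sum(features[m][d] for m in liked) / len(liked) for d in range(dims)]
    candidates = [m for m in features if m not in liked]
    return sorted(candidates, key=lambda m: cosine(profile, features[m]), reverse=True)

print(recommend(["Iron Man", "Iron Man 2", "Iron Man 3"], features))
# ['I, Robot', 'The Notebook']
```

Only item features and one user's history are needed, which is why this approach can work from the first few choices; the cost is having to describe every product.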

Hybrid model

Hybrid approaches are often the best. We can combine the two methods into a hybrid recommender system in several ways: by making content-based and collaborative-based predictions separately and then combining them; by adding content-based capabilities to a collaborative-based approach (and vice versa); or by unifying the approaches into one model.
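A minimal sketch of the first option (separate predictions, then combined), assuming each recommender already produces a score per item: both score sets are min-max rescaled to [0, 1] and blended with a weight `alpha`. The scores, item names, and the linear blend itself are illustrative assumptions; other combination rules work too.

```python
def min_max(scores):
    """Rescale a {item: score} dict to [0, 1] so the two sources are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {item: 1.0 for item in scores}
    return {item: (s - lo) / (hi - lo) for item, s in scores.items()}

def hybrid_rank(cf_scores, cb_scores, alpha=0.5):
    """Rank items by alpha * collaborative score + (1 - alpha) * content score.

    An item missing from one source gets 0 for that source.
    """
    cf, cb = min_max(cf_scores), min_max(cb_scores)
    items = set(cf) | set(cb)
    combined = {i: alpha * cf.get(i, 0.0) + (1 - alpha) * cb.get(i, 0.0) for i in items}
    return sorted(combined, key=combined.get, reverse=True)

# Hypothetical scores from a collaborative and a content-based recommender.
cf_scores = {"ml_book_3": 3.4, "cookbook": 0.4, "novel": 0.3}
cb_scores = {"ml_book_3": 0.9, "novel": 0.2, "stats_book_2": 0.8}
print(hybrid_rank(cf_scores, cb_scores))
```

The content-based side lets `stats_book_2`, which no similar user has rated yet, still rank highly; tuning `alpha` shifts the balance between the two sources.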

Q19: Explain what a false positive and a false negative are. Why is it important to differentiate these from each other?

This question seems to duplicate questions 4 and 10, so I will skip it.

Q20: Which tools do you use for visualization? What do you think of Tableau? R? SAS? (for graphs). How would you efficiently represent 5 dimensions in a chart (or in a video)?

Since I mostly use Python in my daily life and research, all the visualization tools I use are in Python.

Here are the ones I use most:

- matplotlib, the most powerful and flexible package for plotting nice figures. A lot of the figures in my papers were generated with it.

- pandas, which is not only an analysis tool; its plotting capability is also really great.

- Seaborn, which I use mostly for quick, nice plot styles. Since newer versions of matplotlib added different styles, I guess I will use it less.

- Bokeh, a very nice interactive plotting package. I usually use it to generate interactive HTML files that are easy to pass around.

- Basemap, which is what I usually use for plotting maps.

- cartopy, a nice package for quick map plotting.

- folium, my favorite interactive map plotting package, built on top of Leaflet.

Here are some more nice packages that I occasionally use:

* geoplotlib, a toolbox for visualizing geographical data and making maps.

* mpld3, another interactive plotting package, built on top of D3.

* pygal, another interactive plotting package. It can output figures as SVGs.

* ggplot, plotting based on R's ggplot2.

* datashader, which can create meaningful representations of large amounts of data.

* missingno, a package for visualizing missing data in messy datasets.

I have never used Tableau, R, or SAS, so I cannot say anything about them.

For plotting high-dimensional data, parallel coordinates are a popular option.

A radar chart is another option. pandas can be used to plot parallel coordinates.
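A minimal sketch of the parallel-coordinates option with pandas, assuming synthetic 5-dimensional data split into two hypothetical groups: `pandas.plotting.parallel_coordinates` draws one vertical axis per column and one line per row, colored by the `class_column`. The column names, group shift, and output filename are made up for illustration.

```python
import matplotlib
matplotlib.use("Agg")  # render off-screen; no display needed
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
from pandas.plotting import parallel_coordinates

rng = np.random.default_rng(0)
n = 30
# Synthetic 5-dimensional data in two groups, for illustration only.
df = pd.DataFrame(rng.normal(size=(n, 5)), columns=["d1", "d2", "d3", "d4", "d5"])
df["group"] = np.where(np.arange(n) < n // 2, "A", "B")
df.loc[df["group"] == "B", ["d1", "d3"]] += 2  # group B differs on two dimensions

# One polyline per row across the five axes, colored by group.
ax = parallel_coordinates(df, class_column="group", colormap="coolwarm", alpha=0.6)
ax.set_title("Five dimensions in one chart (parallel coordinates)")
plt.savefig("parallel_coordinates.png", dpi=150)
```

The separation between the groups shows up as the B lines rising on the d1 and d3 axes, something a single 2-D scatter plot of two columns could easily miss.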

Instead of plotting the data directly, I usually first check whether most of it can be explained in lower dimensions, say 2 or 3, using Principal Component Analysis (PCA).
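A minimal NumPy sketch of that check, assuming synthetic 5-dimensional data that mostly lies on a 2-D plane: PCA is computed from the SVD of the centered data, and the explained-variance ratios show how much of the data the first few components capture. The `pca` helper is hypothetical; in practice scikit-learn's `PCA` class does the same job.

```python
import numpy as np

def pca(X, n_components=2):
    """Project X (n_samples x n_features) onto its top principal components via SVD."""
    Xc = X - X.mean(axis=0)  # center each feature
    _, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    explained = S**2 / np.sum(S**2)  # fraction of total variance per component
    scores = Xc @ Vt[:n_components].T  # coordinates in the reduced space
    return scores, explained

rng = np.random.default_rng(0)
# Synthetic 5-D data generated from 2 latent factors plus a little noise.
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 5))
X = latent @ mixing + 0.05 * rng.normal(size=(200, 5))

scores, explained = pca(X, n_components=2)
print(scores.shape)  # (200, 2)
print(explained[:2].sum())  # close to 1: two components capture almost everything
```

If the first two or three ratios add up to most of the variance, a 2-D or 3-D scatter plot of `scores` is a faithful summary; if not, the data genuinely needs more dimensions and parallel coordinates become more useful.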

There is a very nice discussion on Quora - 'What is the best way to visualize high-dimensional data'
