I recently saw the 20 Questions to Detect Fake Data Scientists on KDnuggets. Unfortunately, after going through the list, I found that there were many questions I could not answer, or could answer only partially. The result: I am a fake data scientist!!! :-) I don't think these 20 questions can detect whether a data scientist is fake or not; I even think the questions themselves suffer from selection bias (the topic of one of the later questions). Anyway, I had fun answering them. Here are my answers, and if you use these questions to test me again, you will treat me as a genuine data scientist :-) I put these answers here mostly as a reference/summary for myself. If you spot any mistakes, please let me know so that I can become a better data scientist. Because the answers are so long, I split them into two posts; this one covers the first 10 questions. Here is part 2 of the questions.

- Explain what regularization is and why it is useful.

Many machine learning problems can be framed as minimizing a loss function that measures the difference between the model output and the true results. My understanding of regularization is that it adds constraints to the loss function so that the parameters of the model cannot change freely. In a sense, this reduces the flexibility of the model, which means we increase the bias but reduce the variance of the error (to understand the bias-variance trade-off, check out this great blog here). If we choose a reasonable amount of regularization, we get a nice trade-off between bias and variance, and reduce overfitting to the unwanted signal (noise) in the data.

Let's use Lasso (L1 norm) and Ridge (L2 norm) regression as examples. Lasso works by shrinking some of the parameters to very small values, or even exactly zero, which means it performs an internal feature selection and gives you a subset of the features to work with. This smaller set of features makes your model less flexible, but also less sensitive to changes in the data (lower variance). Ridge regression is similar, but it cannot set parameters to exactly zero (it can make them very small, though). This means it gives less weight to some of the parameters, in other words, it treats them as almost nothing. It achieves the same goal of making the model less flexible to reduce overfitting.

- Which data scientists do you admire most? which startups?

The following is a short list of my heroes in this field (in no particular order; I just added people as they came to mind). It may differ from yours, and may even miss some big figures. But remember, this is my list!

Geoff Hinton, from the University of Toronto, who is one of the main forces pushing neural networks and deep learning forward. I especially love this video - The Deep Learning Saga

Michael Jordan, from UC Berkeley. Just look at the list of his past students and postdocs, and you will realize how many great experts he has taught! I took his class once, but at the time I could not understand all of it!

Trevor Hastie and Robert Tibshirani. I learned most of my machine learning knowledge from their books, An Introduction to Statistical Learning and The Elements of Statistical Learning. If you don't know who invented the Lasso method we just talked about above, google it!

Andrew Ng, from Stanford. Most people probably know Andrew from his Coursera machine learning course. Recently, he has been very active in the machine learning field.

Tom M. Mitchell, from Carnegie Mellon University. I first got to know him through his book Machine Learning, a very readable introduction.

Yann LeCun, another driving force in deep learning. He is best known for his contributions to convolutional neural networks.

Christopher Bishop, from University of Edinburgh. He was trained as a physicist, but later became an expert in machine learning. His book Pattern Recognition and Machine Learning is another must-have if you want to learn machine learning.

Yaser S. Abu-Mostafa, Caltech. I took his online course Learning from Data, and it was my first step in machine learning!

Jake VanderPlas, University of Washington. I have used so many of his tutorials and slides, thanks!

- How would you validate a model you created to generate a predictive model of a quantitative outcome variable using multiple regression.

I usually use cross-validation or the bootstrap to make sure my multiple regression works well. To determine how many predictors I need, I use adjusted R² as a metric, and do feature selection using some of the methods described here.

- Explain what precision and recall are. How do they relate to the ROC curve?

For precision and recall, Wikipedia already has a nice answer; see it here. The following figure from Wikipedia is a very nice illustration of the two concepts:

Example

Let's use a simple example to illustrate this: suppose there are 100 iron men and 100 spider men, and you want to build a model to determine which ones are iron man. After you build the model, it gives the results in the following table. From the table, we can see 4 different outcomes:

- True positive (TP) - The model determines it is iron man, and it truly is iron man.

- True negative (TN) - The model determines it is spider man, and it truly is spider man.

- False positive (FP) - The model determines it is iron man, but it is actually spider man.

- False negative (FN) - The model determines it is spider man, but it is actually iron man.

| Est./True | Iron man | Spider man |
| --- | --- | --- |
| Est. Iron man | 90 (TP) | 60 (FP) |
| Est. Spider man | 10 (FN) | 40 (TN) |

Est. stands for Estimate

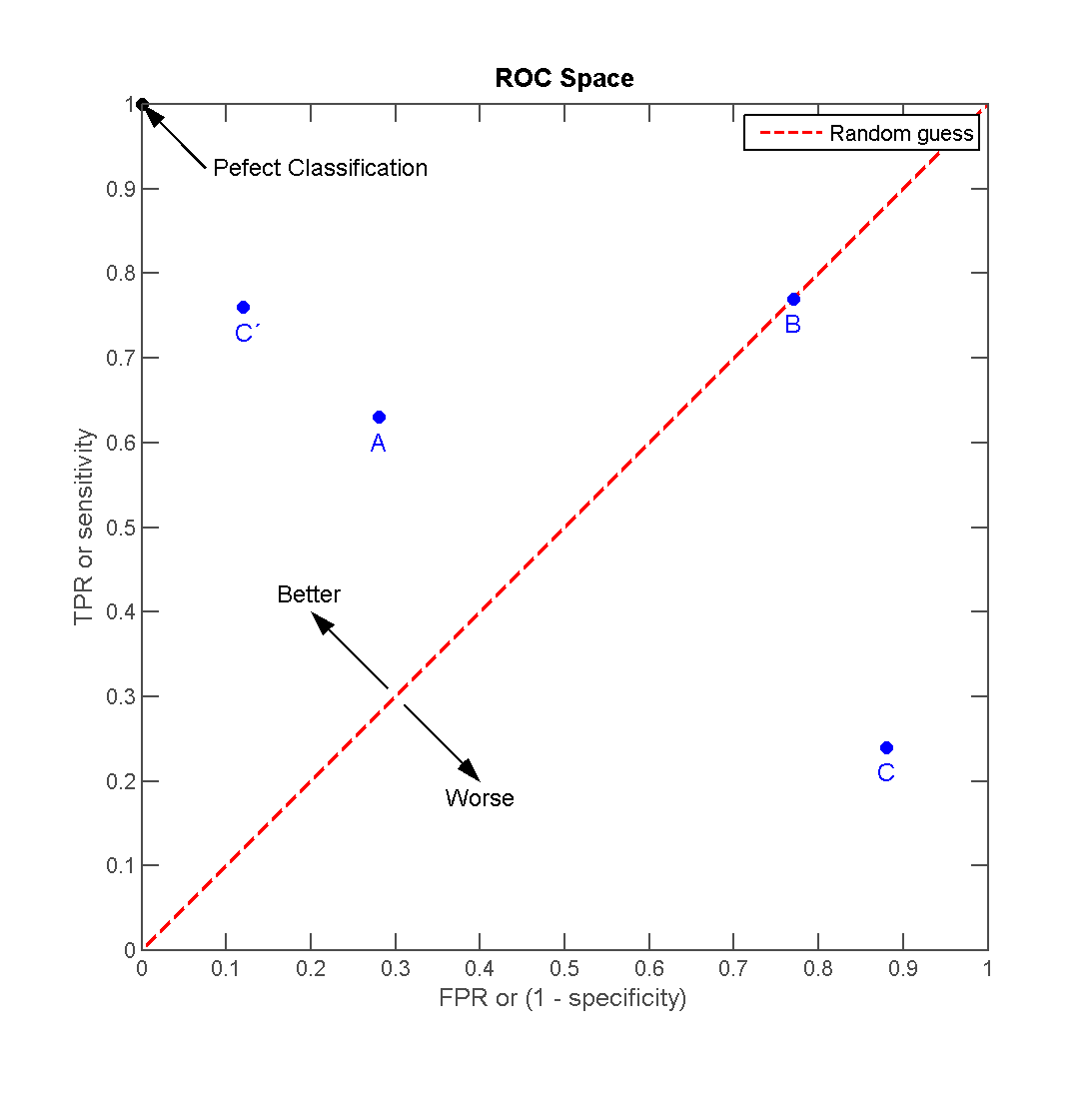

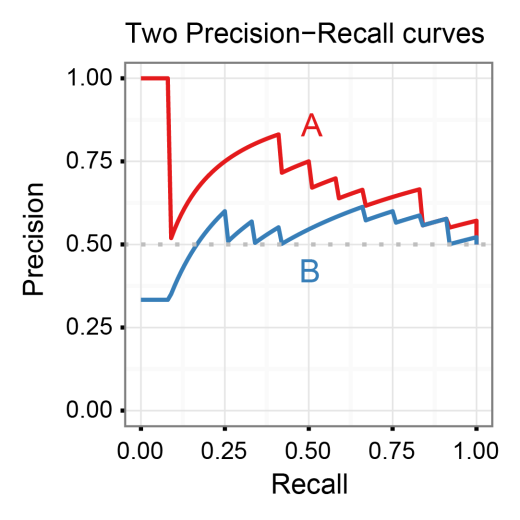

We estimated that there are 150 iron men, of which 90 are true iron men (TP) and 60 are actually spider men (FP). Of the 50 spider man estimates, 40 are correct (TN) and 10 are wrong (FN). Now you can think about this with the above figure.

Let's calculate the Precision and Recall now. Precision answers the question 'Of all the iron men the model estimated, how many are real?'. Recall answers 'Of all the real iron men, how many did the model correctly find?'. (Recall is also called True Positive Rate or Sensitivity.)

Precision = TP / (TP + FP) = 90 / (90 + 60) = 0.6

Recall = TP / (TP + FN) = 90 / (90 + 10) = 0.9

We can see from the results that our model did a decent job of picking out iron men (9 out of 10 are picked out), but at the same time it estimated a lot of spider men as iron men, which means we have a relatively low precision (only 0.6).

If we plot the Recall on the x-axis and the Precision on the y-axis while varying the threshold of the model, we get a Precision-Recall curve. It is a model-wide measure for evaluating binary classifiers. Of course, we want our model to have high Recall and high Precision, that is, we want the curve to move close to the upper-right corner of the plot. It can also be used to compare different models, see the example here.

The ROC curve (Receiver Operating Characteristic) is a plot that illustrates the performance of a binary classifier as the threshold of the classifier is varied. It plots the False Positive Rate (1 - Specificity) on the x-axis and the True Positive Rate (Recall/Sensitivity) on the y-axis.

We know how to calculate Recall from above. The False Positive Rate, which answers 'Of all the real spider men, how many did the model estimate as iron men?', is calculated as:

False Positive Rate = FP / (FP + TN) = 60 / (60 + 40) = 0.6

Therefore, on the ROC curve, we want our model to have a small False Positive Rate but a large True Positive Rate (Recall), that is, we want the curve to move close to the upper-left corner. See an example here from Wikipedia.
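The arithmetic above can be sketched in a few lines of Python. The first part uses the counts from the iron man / spider man table; the second part sweeps a threshold over a small set of labels and scores to show how each threshold gives one point on the Precision-Recall and ROC curves. The labels, scores, thresholds, and function names are made up for illustration and are not from any real model.

```python
def classification_rates(tp, fp, fn, tn):
    """Return (precision, recall, false positive rate) from confusion counts."""
    precision = tp / (tp + fp) if (tp + fp) > 0 else 1.0  # convention when nothing is predicted positive
    recall = tp / (tp + fn)
    false_positive_rate = fp / (fp + tn)
    return precision, recall, false_positive_rate


def confusion_counts(labels, scores, threshold):
    """Count TP, FP, FN, TN when 'score >= threshold' means predicted positive."""
    tp = fp = fn = tn = 0
    for label, score in zip(labels, scores):
        predicted_positive = score >= threshold
        if predicted_positive and label == 1:
            tp += 1
        elif predicted_positive and label == 0:
            fp += 1
        elif not predicted_positive and label == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


# Part 1: counts taken from the table (iron man is the positive class).
precision, recall, fpr = classification_rates(tp=90, fp=60, fn=10, tn=40)
print(f"Precision = {precision:.1f}, Recall = {recall:.1f}, FPR = {fpr:.1f}")

# Part 2: hypothetical labels (1 = iron man) and model scores.
labels = [1, 1, 1, 0, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]

curve_points = {}
for threshold in (0.75, 0.5, 0.25):
    counts = confusion_counts(labels, scores, threshold)
    curve_points[threshold] = classification_rates(*counts)
    p, r, f = curve_points[threshold]
    # (r, p) is a point on the Precision-Recall curve, (f, r) a point on the ROC curve.
    print(f"threshold={threshold}: precision={p:.2f}, recall={r:.2f}, FPR={f:.2f}")
```

Lowering the threshold raises Recall but also raises the False Positive Rate and tends to lower Precision, which is the trade-off both curves visualize.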

We can see that the Precision-Recall curve and the ROC curve are closely related to each other, but the key difference between them lies in what your problem needs. If your problem has an imbalanced dataset, that is, you have a few positive samples but a lot of negative samples (100 iron men and 100000 spider men), and you care more about finding the iron men, then you should use the Precision-Recall curve. Otherwise, you can use the ROC curve. See a nice discussion on Quora here.

For more details, there is a very nice paper, The Relationship Between Precision-Recall and ROC Curves.

- How can you prove that one improvement you've brought to an algorithm is really an improvement over not doing anything?

My answer to this question is simply to do A/B testing. With the same settings (dataset, hardware environment, etc.), we can run both the older version of the algorithm and the modified version, and then compare the results in terms of performance (accuracy, speed, resource usage, etc.).

- What is root cause analysis?

I am not very familiar with this analysis. It appears to be a technique for identifying the underlying causes of why something occurred (usually bad accidents), so that the most effective solutions can be identified and implemented. According to Wikipedia, it is a method of problem solving used for identifying root causes.

- Are you familiar with pricing optimization, price elasticity, inventory management, competitive intelligence? Give examples.

No, I am not familiar with these at all. But a quick check on Wikipedia seems to give enough of an answer.

Pricing optimization: With "optimization" in the term, I think we can guess most of it. It is usually used in industry to analyze how customers respond to changes in the price of a product, and how the company should set the price of a product to maximize profit.

Price elasticity: a measure used in economics to show the responsiveness, or elasticity, of the quantity demanded of a good or service to a change in its price. More precisely, it gives the percentage change in quantity demanded in response to a one percent change in price.

Inventory management: how to efficiently manage the products in inventory so that the company can reduce costs while still providing customers with products in a timely manner. I am guessing Amazon is doing a really good job on this.

Competitive intelligence: understanding and learning what's happening in the world outside your business so you can be as competitive as possible. It means learning as much as possible, as soon as possible, about your industry in general, your competitors, or even your county's particular zoning rules. In short, it empowers you to anticipate and face challenges head on.

- What is statistical power?

Statistical power is the likelihood that a study will detect an effect when there is an effect there to be detected (definition taken from here). It depends mainly on the effect size and the sample size. Here is a very nice analogy for understanding statistical power better.

- Explain what resampling methods are and why they are useful. Also explain their limitations.

Resampling methods differ from the classical parametric tests, which compare observed statistics to theoretical sampling distributions: they are based on repeated sampling within the same sample to draw inferences. Resampling methods are often simpler, require fewer assumptions, and generalize to more situations.

Resampling can be used for different purposes, and the most common ones are:

- To validate models, with methods like the bootstrap and cross-validation. For example, we can use 10-fold cross-validation to validate our model on a limited dataset, or to choose the desired parameters.

- To estimate the precision/uncertainty of sample statistics (medians, variances, etc.), with methods like the jackknife and the bootstrap. For example, to get a confidence interval for a parameter we are interested in, we can use the bootstrap.

- To test hypotheses of 'no effect', with methods like the permutation test, which exchanges labels on data points to build the distribution of the test statistic under the null hypothesis in order to perform a statistical significance test.

- To approximate numerical results, with methods like Monte Carlo, which rely on repeated random sampling from known characteristics. For example, MCMC in Bayesian statistics lets us obtain the posterior distribution by sampling when an analytical solution is impossible to get.
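Two of the uses listed above, the bootstrap confidence interval and the permutation test, can be sketched with only the Python standard library. This is a minimal illustration, assuming a simple percentile bootstrap and a two-sided test on the difference in means; the two groups of measurements, the function names, and the number of resamples are all made up for the example.

```python
import random
import statistics


def bootstrap_ci(sample, stat=statistics.median, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a statistic of one sample."""
    rng = random.Random(seed)
    n = len(sample)
    # Resample WITH replacement from the observed sample and recompute the statistic.
    estimates = sorted(stat(rng.choices(sample, k=n)) for _ in range(n_resamples))
    lower_index = int((alpha / 2) * n_resamples)
    upper_index = min(int((1 - alpha / 2) * n_resamples), n_resamples - 1)
    return estimates[lower_index], estimates[upper_index]


def permutation_test(group_a, group_b, n_permutations=2000, seed=0):
    """Two-sided permutation test for a difference in means between two groups."""
    rng = random.Random(seed)
    observed = abs(statistics.mean(group_a) - statistics.mean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Shuffling the pooled data is equivalent to exchanging the group labels.
        rng.shuffle(pooled)
        diff = abs(statistics.mean(pooled[:n_a]) - statistics.mean(pooled[n_a:]))
        if diff >= observed:
            at_least_as_extreme += 1
    # Add one to numerator and denominator so the p-value is never exactly zero.
    return (at_least_as_extreme + 1) / (n_permutations + 1)


# Hypothetical measurements for two groups.
group_a = [12.1, 11.8, 12.5, 12.9, 11.6, 12.3, 12.7, 12.0]
group_b = [10.2, 10.9, 10.5, 11.1, 10.0, 10.7, 10.4, 10.8]

lower, upper = bootstrap_ci(group_a)
p_value = permutation_test(group_a, group_b)
print(f"95% bootstrap CI for the median of group_a: ({lower}, {upper})")
print(f"Permutation test p-value: {p_value:.4f}")
```

Neither function assumes a particular distribution for the data, which is the main appeal of resampling, but both need thousands of recomputations of the statistic.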

Jake VanderPlas gave a nice talk at PyCon 2016 which briefly touched on some parts of resampling methods; you can find the video of the talk here, and the slides here. For further reading:

A gentle introduction to Resampling techniques.

Resampling Statistics.

Resampling methods: Concepts, Applications, and Justification.

For the limitations:

- Computationally heavy. It is only in recent years, with advances in computing power, that resampling methods have started to become popular.

- Variability due to the finite number of replications. The validation estimates can also be highly variable.

- Is it better to have too many false positives, or too many false negatives? Explain.

This depends on your needs and the consequences of the decision. I will give two examples.

(1) Suppose we are working in a top-secret institution with an automatic door that checks whether the person who wants to enter is staff or a stranger, and we want to stop strangers for security reasons. Here I treat 'staff' as the positive class, so a positive means the door opens. If a staff member is stopped by the door because the algorithm thinks he/she is a stranger, this is a false negative. If the algorithm fails to detect a stranger and lets him/her in, this is a false positive. In this example, we would rather have many false negatives, that is, stop staff members because they are recognized as strangers. The consequence is just that the staff member has to be re-checked by the authorities. If we have too many false positives, a lot of strangers will enter our institution and we may have a big security problem!

(2) In a hospital, we want an algorithm to identify whether a patient has a malignant tumor that needs further treatment. The algorithm classifies the patient's tumor as either benign or malignant. If a patient has a benign tumor but is classified as malignant, this is a false positive. A false negative is when the patient has a malignant tumor but is classified as benign. In this example, we prefer the algorithm to have more false positives, since the consequence is only that the patient does more tests to confirm. But if we have many false negatives, we will miss the best treatment time for a lot of patients who have a malignant tumor.
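One way to make "it depends on the consequences" concrete is to attach a cost to each kind of error and compare models by total cost. The sketch below uses the tumor example; the error counts for the two models and the cost values (a false negative assumed to be 50 times as costly as a false positive) are invented purely for illustration.

```python
def total_error_cost(false_positives, false_negatives, cost_fp, cost_fn):
    """Total cost of a model's mistakes, given a cost per false positive and per false negative."""
    return false_positives * cost_fp + false_negatives * cost_fn


# Hypothetical costs for the tumor example:
# a false positive means extra tests, a false negative means a missed malignant tumor.
COST_FP = 1
COST_FN = 50

# Hypothetical error counts for two models evaluated on the same patients.
model_a = {"false_positives": 40, "false_negatives": 2}   # cautious: flags many patients
model_b = {"false_positives": 5, "false_negatives": 10}   # fewer alarms, more misses

cost_a = total_error_cost(cost_fp=COST_FP, cost_fn=COST_FN, **model_a)
cost_b = total_error_cost(cost_fp=COST_FP, cost_fn=COST_FN, **model_b)

print(f"Model A: {cost_a}, Model B: {cost_b}")
print("Preferred model:", "A" if cost_a < cost_b else "B")
```

With these assumed costs, model A is preferred even though it makes far more mistakes in total (42 versus 15), because its mistakes are the cheap kind. Swapping the two cost values, as in the door example where the costly error is the false positive, would reverse the preference.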
